Benchmarking Huggingface

Let’s try a language mode with HuggingFace

import torch

from transformers import Wav2Vec2Processor, Wav2Vec2ForCTC

from datasets import load_dataset

torch.set_float32_matmul_precision('high')

def run_hf_inference(model, input_values):

# retrieve logits

logits = model(input_values).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

# load model and processor

processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-large-960h-lv60-self")

model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-large-960h-lv60-self").cuda()

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_values = processor(ds[0]["audio"]["array"], return_tensors="pt", padding="longest").input_values.cuda()

batch = 1

torch._dynamo.reset()

compiled_model = torch.compile(model, mode='max-autotune')

t_model = benchmark.Timer(

stmt='run_hf_inference(model, input_values)',

setup='from __main__ import run_hf_inference',

globals={'model': model, 'input_values':input_values})

t_compiled_model = benchmark.Timer(

stmt='run_hf_inference(model, input_values)',

setup='from __main__ import run_hf_inference',

globals={'model': compiled_model, 'input_values':input_values})

t_model_runs = t_model.timeit(100)

t_compiled_model_runs = t_compiled_model.timeit(100)

print(f"\nHuggingface Inference speedup: {100*(t_model_runs.mean - t_compiled_model_runs.mean) / t_model_runs.mean: .2f}%")

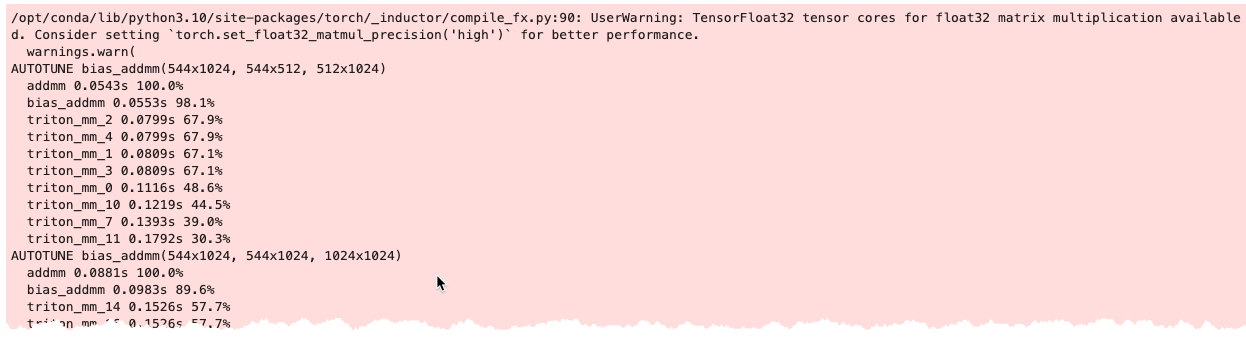

Output: You should see an output like this showing auto-tune in action where the compiler is running multiple CUDA kernels to determine the most performant one.

You’ll be trading off slower compile time for potentially faster training time.

What speedup do you see? Discuss!

Huggingface Inference speedup: X.XX%