A Tour of PyTorch 2.0

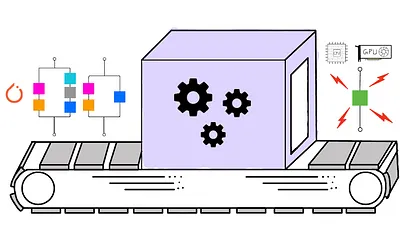

Abstract: Learn about PyTorch 2.0 with a deep dive into the technology stack that powers the new torch.compile() API: TorchDynamo, AOTAutograd, and TorchInductor. The new compiler stack reduces training times across a wide range of workloads while being fully backwards compatible. Bring your laptop, or connect to a remote GPU-powered system, and learn how to compile, profile, and debug your code to improve performance.

Learning objectives

At the end of this tutorial you’ll be able to:

- Describe the advantages of using torch.compile()

- Demonstrate how to use torch.compile() to speed up PyTorch

- Explain what happens under the hood when you call torch.compile()

- List the different Intermediate Representations (IRs) in PyTorch

- Recognize the importance of operator fusion and code generation
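As a preview of the second objective, here is a minimal sketch of wrapping a function with torch.compile() and checking that the compiled version matches eager mode. The function names `gelu_bias` and `compile_and_check`, the tensor shapes, and the tolerance are illustrative assumptions, not part of the tutorial material. The default `"inductor"` backend needs a working C++ compiler on CPU (or Triton on GPU); passing `backend="eager"` exercises only the TorchDynamo graph capture step, without code generation.

```python
import torch


def gelu_bias(x: torch.Tensor, bias: torch.Tensor) -> torch.Tensor:
    # Hypothetical example function: a chain of pointwise ops,
    # which is a typical candidate for operator fusion.
    return torch.nn.functional.gelu(x + bias) * 2.0


def compile_and_check(backend: str = "inductor") -> bool:
    """Compile gelu_bias with the given backend and compare against eager mode."""
    compiled = torch.compile(gelu_bias, backend=backend)

    # Illustrative shapes; any broadcast-compatible tensors would do.
    x = torch.randn(64, 128)
    bias = torch.randn(128)

    expected = gelu_bias(x, bias)   # plain eager execution
    actual = compiled(x, bias)      # the first call triggers compilation
    return torch.allclose(expected, actual, atol=1e-4)


if __name__ == "__main__":
    print(compile_and_check())
```

The first call to the compiled function pays the compilation cost, so any timing comparison should exclude it and measure later calls only.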

Agenda

| Topics | Duration |
|---|---|
| Setup and getting started | 10 mins |
| What’s New in PyTorch 2.0 | 10 mins |
| Hands-on: Simple benchmarks with torch.compile() in PyTorch 2.0 | 20 mins |
| Hands-on: What happens under the hood when you call torch.compile()? | 15 mins |
| Hands-on: Profiling PyTorch code with torch.profiler and inspecting the compilation process | 15 mins |
| Resources + Q&A | 10 mins |