What is an AI accelerator?

An AI accelerator is a dedicated processor designed to accelerate machine learning computations. Machine learning, and particularly its subset deep learning, is primarily composed of a large number of linear algebra computations (i.e. matrix-matrix and matrix-vector operations), and these operations can be easily parallelized. AI accelerators are specialized hardware designed to accelerate these basic machine learning computations, improving performance, reducing latency, and reducing the cost of deploying machine learning based applications.
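To see why these operations parallelize so easily, here is a minimal sketch in plain Python. Each row of the output matrix depends only on one row of the first matrix and on the second matrix, so the rows can be computed independently. The function names (`dot`, `matmul_rows_parallel`) and the `workers` parameter are made up for this illustration, and Python threads are used only to show the independence of the work, not to get a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matmul_rows_parallel(A, B, workers=4):
    """Compute A @ B by handing each output row to a separate task.

    No task reads or writes another task's output row, which is the
    property that lets hardware compute many rows (or elements) at once.
    """
    cols = list(zip(*B))  # columns of B, so each output element is one dot product

    def one_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so rows come back in the right place
        return list(pool.map(one_row, A))


A = [[1, 2], [3, 4], [5, 6]]        # 3 x 2
B = [[7, 8, 9], [10, 11, 12]]       # 2 x 3
C = matmul_rows_parallel(A, B)
print(C)  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
```

An accelerator exploits the same independence in hardware, with many multiply-accumulate units working on different output elements at the same time.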

Why do we need specialized AI accelerators?

The two most important reasons for building dedicated processors for machine learning are:

- Energy efficiency

- Faster performance

Recent efforts to improve model accuracy have introduced larger models with more parameters, trained on larger data sets. As model sizes grow, current processors won’t be able to deliver the processing power needed to train or run inference on these models under tight time-to-train and inference latency requirements.

AI accelerators, on the other hand, can be designed with features to minimize memory access, offer larger on-chip caches, and include dedicated hardware features to accelerate matrix-matrix computations. Since AI accelerators are purpose-built devices, they are “aware” of the algorithms they run, and their dedicated features run those algorithms more efficiently than a general purpose processor.
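One common way to cut down on memory access in matrix-matrix computations is tiling (also called blocking): the work is split into small blocks that fit in fast on-chip memory, and each block is reused many times before moving on. The sketch below shows the idea in plain Python. It is an illustration of the general technique only, not a description of how any particular accelerator is built, and the names `matmul_naive`, `matmul_tiled`, and `tile` are made up for this example.

```python
def matmul_naive(A, B):
    """Reference matrix multiply: one dot product per output element."""
    n, k, m = len(A), len(B), len(B[0])
    return [[sum(A[i][p] * B[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def matmul_tiled(A, B, tile=2):
    """Matrix multiply that walks the matrices one tile-sized block at a time.

    Inside the three inner loops only a tile x tile block of A, B and C
    is touched, which is the working set a small on-chip memory would hold.
    """
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # block row of C
        for j0 in range(0, m, tile):      # block column of C
            for p0 in range(0, k, tile):  # block along the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = C[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += A[i][p] * B[p][j]
                        C[i][j] = acc
    return C


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]   # 3 x 4
B = [[1, 0, 2], [0, 1, 3], [4, 5, 6], [7, 8, 9]]    # 4 x 3
same = matmul_tiled(A, B, tile=2) == matmul_naive(A, B)
print(same)  # True
```

Both functions produce the same result. The tiled version just changes the order in which the data is visited, so that values already loaded get reused instead of being fetched again from slower memory.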

List of popular AI accelerators for training

- NVIDIA GPUs: Available on AWS, GCP, Azure and at your local computer store (See my recommendation list on the left menu)

- AWS Trainium: Available on AWS

- Intel Habana Gaudi: Available on AWS (v1) and Intel DevCloud (v1 and v2)

- Google Cloud TPUs: Available on GCP and via Colab (v1-v4)

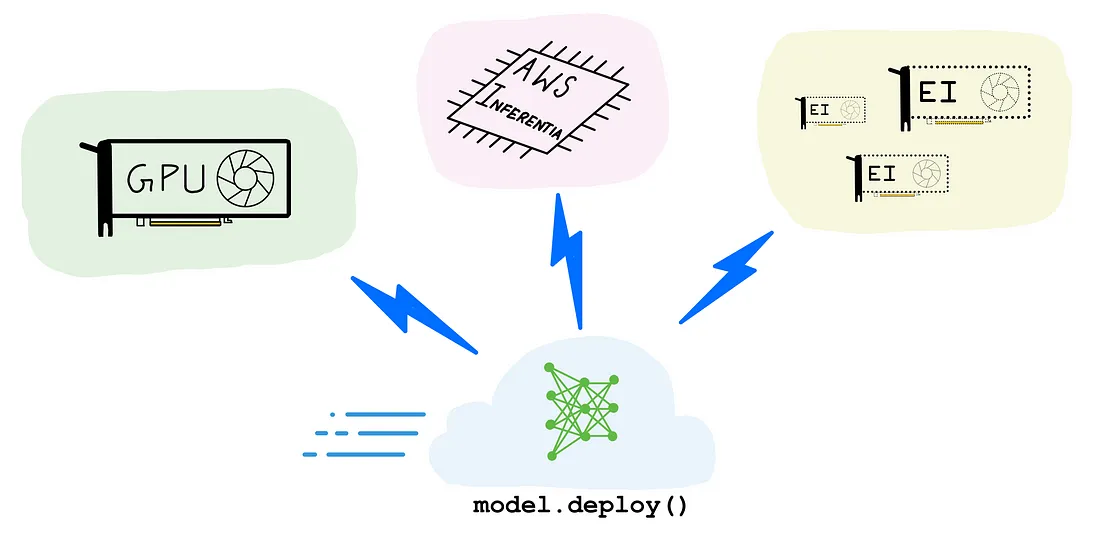

List of popular AI accelerators for inference

- NVIDIA GPUs: Available on AWS, GCP, Azure (See my recommendation list on the left menu)

- AWS Inferentia: Available on AWS (See my recommended blog post below)

- Intel Habana Gaudi: Available on AWS and Intel DevCloud (v1 and v2)

- Google Cloud TPUs: Available on GCP and via Colab (v1-v4)