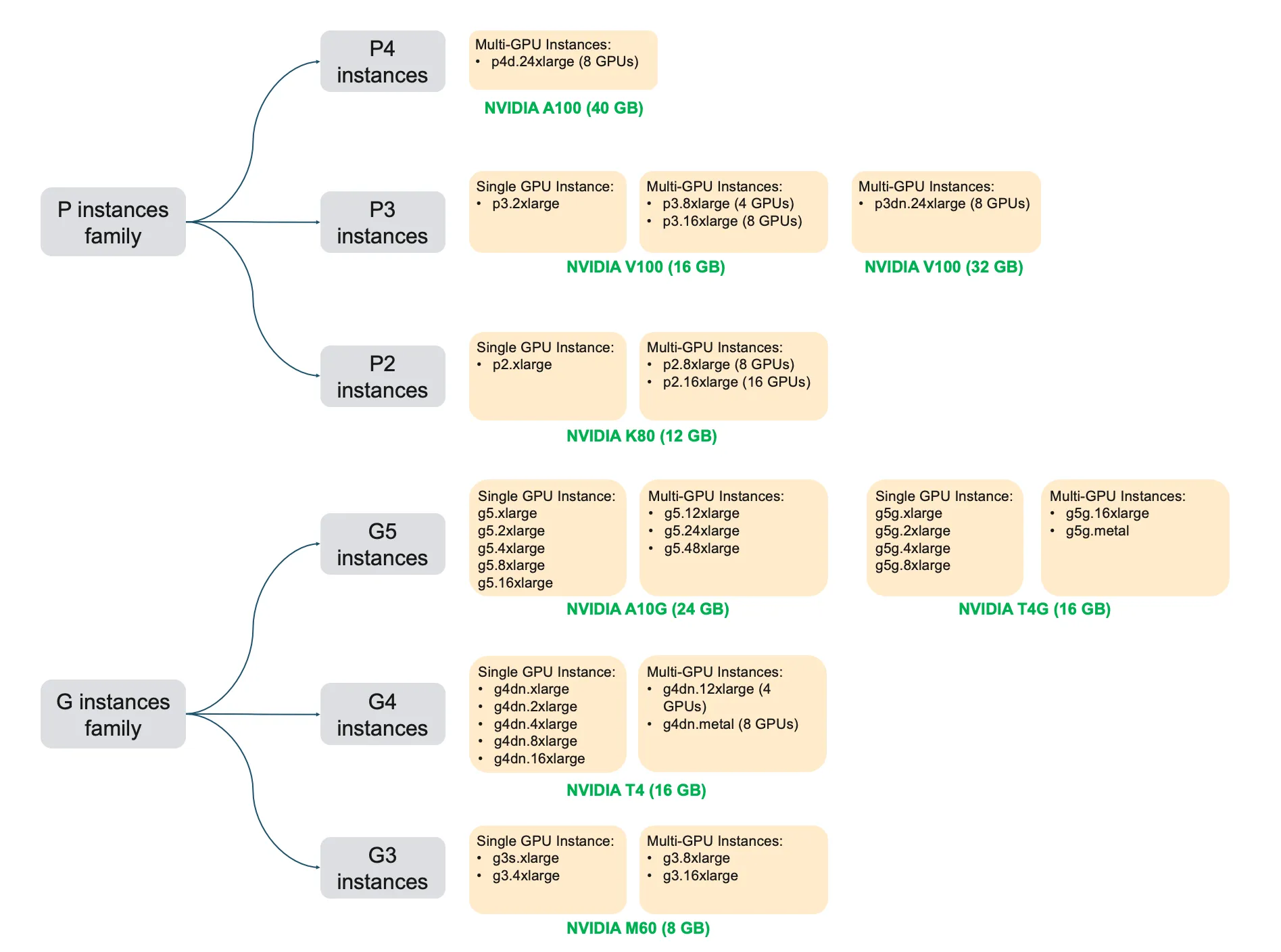

AWS GPU instances complete list

A complete list of all Amazon EC2 GPU instance types on AWS that I’ve painstakingly compiled, because you can’t find this information anywhere in AWS docs

The tabular format below gives more detailed information for each instance: GPU type, interconnect, thermal design power (TDP), supported precision types, and so on.

Tabular format

With more information than you were probably looking for 😊

| Architecture | NVIDIA GPU | Instance type | Instance name | Number of GPUs | GPU Memory (per GPU) | GPU Interconnect (NVLink / PCIe) | Thermal Design Power (TDP) from nvidia-smi | Tensor Cores (mixed-precision) | Precision Support | CPU Type | Nitro-based |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ampere | A100 | P4 | p4d.24xlarge | 8 | 40 GB | NVLink gen 3 (600 GB/s) | 400W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Ampere | A10G | G5 | g5.xlarge | 1 | 24 GB | NA (single GPU) | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.2xlarge | 1 | 24 GB | NA (single GPU) | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.4xlarge | 1 | 24 GB | NA (single GPU) | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.8xlarge | 1 | 24 GB | NA (single GPU) | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.16xlarge | 1 | 24 GB | NA (single GPU) | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.12xlarge | 4 | 24 GB | PCIe | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.24xlarge | 4 | 24 GB | PCIe | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Ampere | A10G | G5 | g5.48xlarge | 8 | 24 GB | PCIe | 300W | Tensor Cores (Gen 3) | FP64, FP32, FP16, INT8, BF16, TF32 | AMD EPYC | Yes |
| Turing | T4G | G5g | g5g.xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4G | G5g | g5g.2xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4G | G5g | g5g.4xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4G | G5g | g5g.8xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4G | G5g | g5g.16xlarge | 2 | 16 GB | PCIe | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4G | G5g | g5g.metal | 2 | 16 GB | PCIe | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | AWS Graviton2 | Yes |
| Turing | T4 | G4 | g4dn.xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.2xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.4xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.8xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.16xlarge | 1 | 16 GB | NA (single GPU) | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.12xlarge | 4 | 16 GB | PCIe | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Turing | T4 | G4 | g4dn.metal | 8 | 16 GB | PCIe | 70W | Tensor Cores (Gen 2) | FP32, FP16, INT8 | Intel Xeon Scalable (Cascade Lake) | Yes |
| Volta | V100 | P3 | p3.2xlarge | 1 | 16 GB | NA (single GPU) | 300W | Tensor Cores (Gen 1) | FP64, FP32, FP16 | Intel Xeon (Broadwell) | No |
| Volta | V100 | P3 | p3.8xlarge | 4 | 16 GB | NVLink gen 2 (300 GB/s) | 300W | Tensor Cores (Gen 1) | FP64, FP32, FP16 | Intel Xeon (Broadwell) | No |
| Volta | V100 | P3 | p3.16xlarge | 8 | 16 GB | NVLink gen 2 (300 GB/s) | 300W | Tensor Cores (Gen 1) | FP64, FP32, FP16 | Intel Xeon (Broadwell) | No |
| Volta | V100* | P3 | p3dn.24xlarge | 8 | 32 GB | NVLink gen 2 (300 GB/s) | 300W | Tensor Cores (Gen 1) | FP64, FP32, FP16 | Intel Xeon (Skylake) | Yes |
| Kepler | K80 | P2 | p2.xlarge | 1 | 12 GB | NA (single GPU) | 149W | No | FP64, FP32 | Intel Xeon (Broadwell) | No |
| Kepler | K80 | P2 | p2.8xlarge | 8 | 12 GB | PCIe | 149W | No | FP64, FP32 | Intel Xeon (Broadwell) | No |
| Kepler | K80 | P2 | p2.16xlarge | 16 | 12 GB | PCIe | 149W | No | FP64, FP32 | Intel Xeon (Broadwell) | No |
| Maxwell | M60 | G3 | g3s.xlarge | 1 | 8 GB | NA (single GPU) | 150W | No | FP32 | Intel Xeon (Broadwell) | No |
| Maxwell | M60 | G3 | g3.4xlarge | 1 | 8 GB | NA (single GPU) | 150W | No | FP32 | Intel Xeon (Broadwell) | No |
| Maxwell | M60 | G3 | g3.8xlarge | 2 | 8 GB | PCIe | 150W | No | FP32 | Intel Xeon (Broadwell) | No |
| Maxwell | M60 | G3 | g3.16xlarge | 4 | 8 GB | PCIe | 150W | No | FP32 | Intel Xeon (Broadwell) | No |
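
The table lists GPU memory per GPU, so the total GPU memory on an instance is that figure multiplied by the number of GPUs. Here is a minimal Python sketch of that arithmetic, plus a simple filter on the Precision Support column. It uses only a handful of rows copied from the table; the names `GpuInstance`, `ROWS`, `total_gpu_memory_gb` and `instances_supporting` are my own, made up for illustration, and are not part of any AWS or NVIDIA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuInstance:
    """One row of the table, reduced to the columns used here."""
    name: str
    gpu: str
    num_gpus: int
    mem_per_gpu_gb: int
    precisions: frozenset


AMPERE = frozenset({"FP64", "FP32", "FP16", "INT8", "BF16", "TF32"})
TURING = frozenset({"FP32", "FP16", "INT8"})
VOLTA = frozenset({"FP64", "FP32", "FP16"})
KEPLER = frozenset({"FP64", "FP32"})

# A few rows copied from the table above (not the full list).
ROWS = [
    GpuInstance("p4d.24xlarge", "A100", 8, 40, AMPERE),
    GpuInstance("g5.48xlarge", "A10G", 8, 24, AMPERE),
    GpuInstance("g4dn.12xlarge", "T4", 4, 16, TURING),
    GpuInstance("p3.16xlarge", "V100", 8, 16, VOLTA),
    GpuInstance("p3dn.24xlarge", "V100", 8, 32, VOLTA),
    GpuInstance("p2.16xlarge", "K80", 16, 12, KEPLER),
]


def total_gpu_memory_gb(inst: GpuInstance) -> int:
    """Total GPU memory on the instance: GPU count x memory per GPU."""
    return inst.num_gpus * inst.mem_per_gpu_gb


def instances_supporting(rows, precision, min_total_gb=0):
    """Names of instances that list `precision` and have at least
    `min_total_gb` of total GPU memory, in table order."""
    return [
        r.name
        for r in rows
        if precision in r.precisions and total_gpu_memory_gb(r) >= min_total_gb
    ]


if __name__ == "__main__":
    for row in ROWS:
        print(f"{row.name}: {total_gpu_memory_gb(row)} GB total GPU memory")
    print("BF16:", instances_supporting(ROWS, "BF16"))
```

For example, `total_gpu_memory_gb(ROWS[0])` gives 320 for p4d.24xlarge (8 × 40 GB), and `instances_supporting(ROWS, "FP16", min_total_gb=200)` narrows the sample down to p4d.24xlarge and p3dn.24xlarge.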