A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference

Let’s start by answering the question “What is an AI accelerator?”

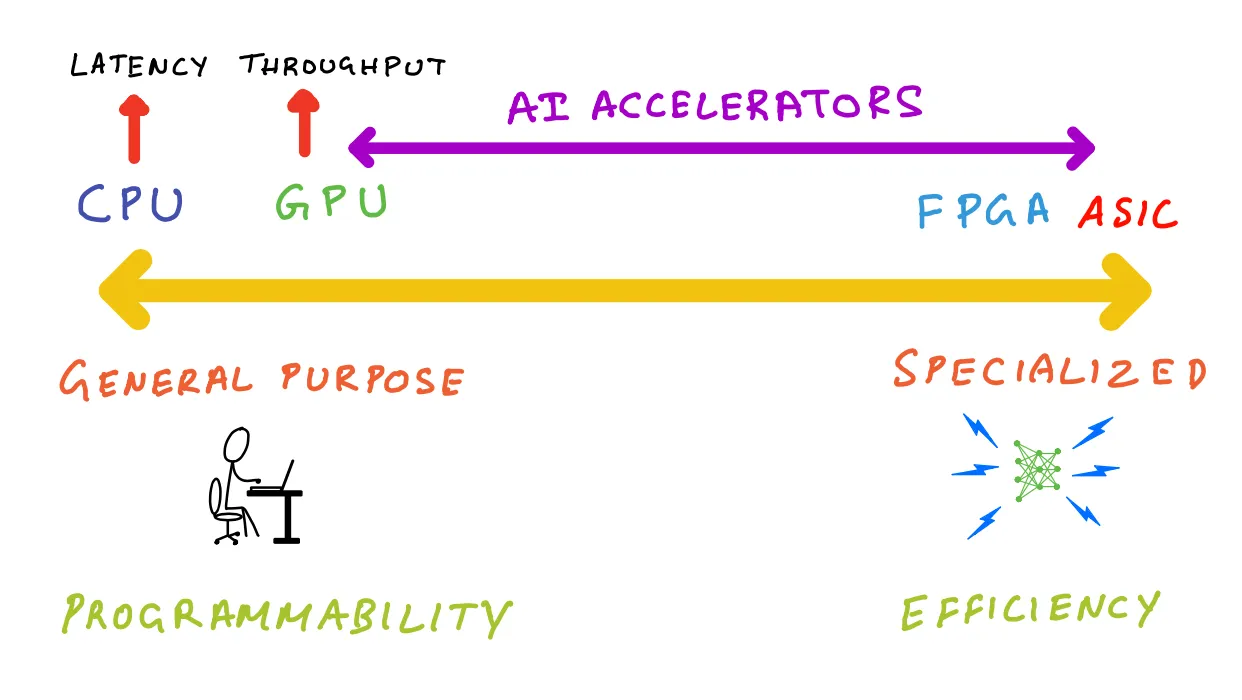

An AI accelerator is a dedicated processor designed to accelerate machine learning computations. Machine learning, and particularly its subset deep learning, consists primarily of a large number of linear algebra computations (i.e., matrix-matrix and matrix-vector operations), and these operations are easy to parallelize. AI accelerators are specialized hardware built to speed up these basic computations, which improves performance, reduces latency, and lowers the cost of deploying machine learning based applications.
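To see why these operations parallelize so easily, here is a minimal sketch in plain Python of a matrix-vector product. The function names (`dot`, `matvec_serial`, `matvec_parallel`) and the toy `weights` and `inputs` values are made up for illustration. Each output element depends on only one row of the matrix and the input vector, so the rows can be handed to separate workers with no coordination between them. The thread pool here only demonstrates that independence; it is not meant to be fast, and a real accelerator does this in hardware across thousands of multiply-accumulate units.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_serial(matrix, vec):
    """Compute matrix @ vec one row at a time."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Compute matrix @ vec with each row handled by a separate task.

    No row needs the result of any other row, so the tasks share
    nothing except the read-only input vector.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so results line up with rows.
        return list(pool.map(lambda row: dot(row, vec), matrix))


# Hypothetical toy data: a 2x3 "weight" matrix and a length-3 input.
weights = [[1, 2, 3], [4, 5, 6]]
inputs = [1, 0, -1]

serial_result = matvec_serial(weights, inputs)
parallel_result = matvec_parallel(weights, inputs)
print(serial_result, parallel_result)
```

Both functions return the same values. The only difference is that the parallel version makes the independence of each row explicit, which is the property accelerators exploit.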

Do I need an AI accelerator for machine learning (ML) inference?

Let’s say you have an ML model as part of your software application. The prediction step (or inference) is often the most time-consuming part of your application, and it directly affects user experience. A model that takes several hundred milliseconds to generate text translations, apply filters to images, or generate product recommendations can drive users away from your “sluggish”, “slow”, “frustrating to use” app. By speeding up inference, you can reduce the overall application latency and deliver an app experience that can be described as “smooth”, “snappy”, and “delightful to use”. And you can speed up inference by offloading ML model prediction computation to an AI accelerator.

With great market need come a great many product alternatives, so naturally there is more than one way to accelerate your ML models in the cloud. In this blog post, I’ll explore three popular options:

- GPUs: Particularly, the high-performance NVIDIA T4 and NVIDIA V100 GPUs

- AWS Inferentia: A custom-designed machine learning inference chip by AWS

- Amazon Elastic Inference (EI): An accelerator that reduces cost by giving you access to variable-size GPU acceleration for models that don’t need a dedicated GPU