Shashank Prasanna

Developer Relations Leader | AI/ML Technical Expert | Developer Marketing Strategist | Technology Educator

Hi!👋🏽 I’m Shashank. I build, grow, and inspire AI/ML developer communities around the world.

With over 15 years leading developer relations and marketing programs at Apple, NVIDIA, AWS, Meta, Modular AI and MathWorks, I’ve helped thousands of developers adopt new AI/ML frameworks, AI cloud services and infrastructure, on-device AI frameworks, and AI accelerators for training and inference.

I’m an AI/ML DevRel strategist who specializes in building engagement frameworks that guide developers through the entire adoption lifecycle: generating awareness, driving deep technical evaluation, and supporting high-touch adoption. I have a proven track record of doing this at Apple, NVIDIA, and AWS.

Beyond strategy, I am a hands-on technical educator, known for delivering high-quality and deeply technical talks, running hands-on workshops, consulting developers on AI/ML best practices, writing developer messaging, and launching developer-first products that make advanced AI/ML frameworks, libraries and tools more accessible. I’ve built global developer engagement strategies and coached speakers for major AI/ML events such as WWDC, GTC and re:Invent.

I currently work at Apple as an AI/ML Technologies Evangelist. I plan and lead the AI/ML developer content at WWDC, shape messaging for new frameworks and APIs, and coach speakers to tell their stories with clarity and impact. I also define and execute targeted engagement plans that drive adoption of newly announced frameworks and APIs among top-priority app developers.

I believe the best developer programs are built on:

- Uncompromisingly high-quality technical education that reduces developer friction.

- Tight feedback loops with developers and a relentless focus on developer productivity.

- An open ecosystem, community connection, and sharing.

When I’m not writing, reading, or sharing what I learn, you’ll find me running 🏃 local streets and trails, testing the latest running shoes, reading running books, or brewing the perfect cup of coffee ☕️.

Featured Talks, Publications and Videos

I’ve spoken at events, conferences and meetups across the globe, including NVIDIA GTC

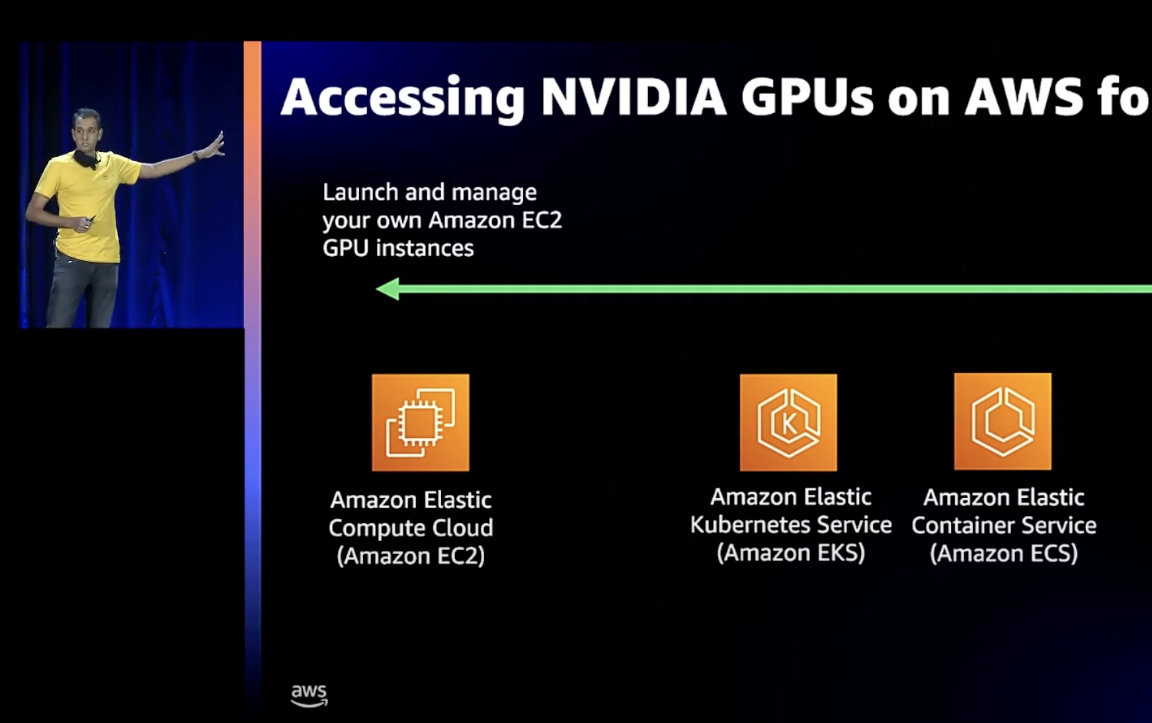

AWS re:Invent

Apple Developer Center Events

Open Data Science Conference (ODSC)

Docker events

Future Technologies Conference (FTC)

O’Reilly AI

AWS re:MARS

AWS Summits

AWS Innovate

Dev Days

Collision Conference

AI Accelerator Conf.

University & Community Meetups

and at numerous community meetups and university events.

You’ll find many of those talks, workshops, YouTube videos and published articles below.

Choosing the right GPU for deep learning on AWS (🔥150k+ views)

A complete guide to AI accelerators for deep learning inference (🔥50k+ views)

AI accelerators, machine learning algorithms and their co-design and evolution

How Docker Runs Machine Learning on NVIDIA GPUs, AWS Inferentia, and Other Hardware AI Accelerators

Deploying GPU-Optimized Machine Learning Models to the Cloud and the Edge

TensorRT 3: Faster TensorFlow Inference and Volta Support

Production Deep Learning with NVIDIA GPU Inference Engine

Deep Learning for Computer Vision with MATLAB and cuDNN

Deep Learning for Object Detection with DIGITS

GTC: A Developer’s Guide to Choosing the Right GPUs for Deep Learning

GTC: A Developer’s Guide to Improving GPU Utilization and Reducing Deep Learning Costs

GTC: Improve ML Training Performance with Amazon SageMaker Debugger

Accelerating Data Science with NVIDIA RAPIDS on AWS

GTC: GPU-Accelerated Deep Learning at Scale with TensorFlow, PyTorch, and MXNet in the Cloud

Why use Docker containers for machine learning development?

Introducing Amazon SageMaker Components for Kubeflow Pipelines

A quick guide to distributed training with TensorFlow and Horovod

Choose the right instance for inference deployment with SageMaker Inference Recommender

Speeding up deep learning training with SageMaker Training Compiler

Introduction to Amazon SageMaker Serverless Inference | Concepts & Code examples

Get access to FREE GPU-powered JupyterLab based IDE for ML with Amazon SageMaker Studio Lab

Machine Learning with Containers and Amazon SageMaker

Keynote: How machine learning is making customer experience more human

Using Containers for Deep Learning Workflows

Train Deep Learning Models on GPUs using Amazon EC2 Spot Instances (outdated approach)

7 things you should know about AI & Machine Learning launches at re:invent 2021

How Pytorch 2.0 accelerates deep learning with operator fusion and CPU/GPU code-generation

Machine learning with AutoGluon, an open source AutoML library

Introduction to TorchServe, an open-source model serving library for PyTorch

Deploying PyTorch models for inference at scale using TorchServe

A quick guide to managing machine learning experiments

How to scale machine learning experiments

How to debug machine learning models to catch issues early and often

An easy introduction to Mojo🔥 for Python programmers

Implementing NumPy style matrix slicing in Mojo🔥

How to setup a Mojo🔥 development environment with Docker containers

Introduction to Tensors in Mojo🔥

Using the Mojo 🔥 Visual Studio Extension 🚀

Using Mojo🔥 with Docker containers

Getting started with the Mojo SDK🔥

Speeding up Python code with Mojo🔥: Mandelbrot example

What’s New in Mojo 24.4: Improved Collections, New Traits, OS Module Features, and Core Language Enhancements

Fast K-Means Clustering in Mojo: Guide to Porting Python to Mojo for Accelerated K-Means Clustering

What’s New in Mojo 24.3: Community Contributions, Pythonic Collections, and Core Language Enhancements

Row-Major vs Column-Major Matrices: A Performance Analysis in Mojo and NumPy

What’s New in Mojo 24.2: Mojo Nightly, Enhanced Python Interop, OSS Stdlib, and More

Deploying MAX on Amazon SageMaker

Mojo Pi: Approximating Pi with Mojo Using Monte Carlo Methods

Evaluating MAX Engine Inference Accuracy on the ImageNet Dataset

Optimize and Deploy AI Models with MAX Engine and MAX Serving

Getting Started with MAX Developer Edition

What Are Dunder Methods? A Guide in Mojo

Mojo & Python: Calculating and Plotting a Valentine’s Day Using Mojo and Python

What Is Loop Unrolling? How You Can Speed Up Mojo

Mojo SDK v0.7 Now Available for Download

🎥 Apple livestreams

Code along with the Foundation Models framework | Meet with Apple

🎥 Mojo🔥 livestreams

Modular Community Livestream - New in MAX 24.4

Modular Community Livestream - New in MAX 24.3

Modular Community Livestream - New in MAX 24.2

Modular Community Livestream - MAX⚡️Developer Edition!

Modular Community Livestream - Mojo🔥 SDK v0.7 edition!

Modular Community Livestream – ModCon recap + Q&A

Modular Community Livestream - Mojo🔥 SDK v0.5 edition!

Modular Community Livestream - Mojo🔥 on Mac

Modular Community Livestream - Mojo🔥 SDK

Modular Community Q&A Livestream

🎥 PyTorch livestreams

PyTorch 2.0 Live Q&A Series: PyTorch 2.0 Export

PyTorch 2.0 Live Q&A Series: A Deep Dive on TorchDynamo

PyTorch 2.0 Q&A: Deep Dive into TorchInductor and PT2 Backend Integration

PyTorch 2.0 Q&A: Optimizing Transformers for Inference

PyTorch 2.0 Q&A: Dynamic Shapes and Calculating Maximum Batch Size

PyTorch 2.0 Q&A: TorchRL

2-D Parallelism using DistributedTensor and PyTorch DistributedTensor

🛠️ Workshops

A Tour of PyTorch 2.0

PyTorch Distributed Training on AWS

📣 Announcements & Company News

Key announcements from ModCon 2023

What’s new in Mojo SDK v0.5?

Mojo🔥 is now available on Mac

What’s the difference between the AI Engine and Mojo?

Modular to bring NVIDIA Accelerated Computing to the MAX Platform

Modular partners with Amazon Web Services (AWS) to bring MAX Enterprise Edition exclusively to AWS services

ModCon 2023 sessions you don’t want to miss!